Department of Computer Science

Cognitive Systems Group

Event-Based Vision for Fast Robot Control

When a Table Tennis Robot meets Event-Based Vision

Andreas Ziegler

You can’t get enough of neuromorphic vision - Zürich Hackathon

ZHAW

2024-10-22

Our table tennis robot

Where it started (frame-based)

Our table tennis robot

Where it started (frame-based)

Our table tennis robot

How it continued

Sony AI was interested in the application

A good use case to evaluate event-based vision

- Fast processing time (\(1\) s to \(0.1\) s) is crucial

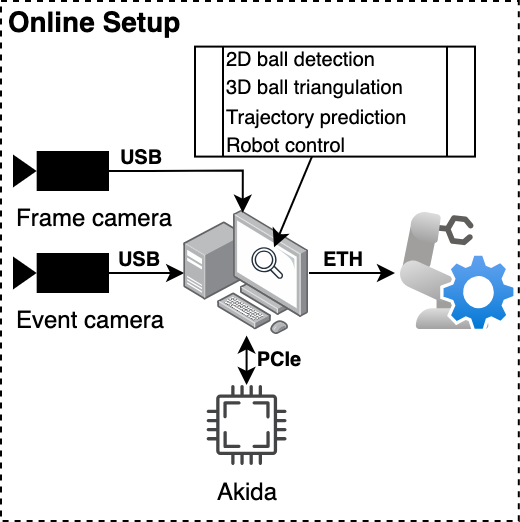

Our table tennis robot

The setup

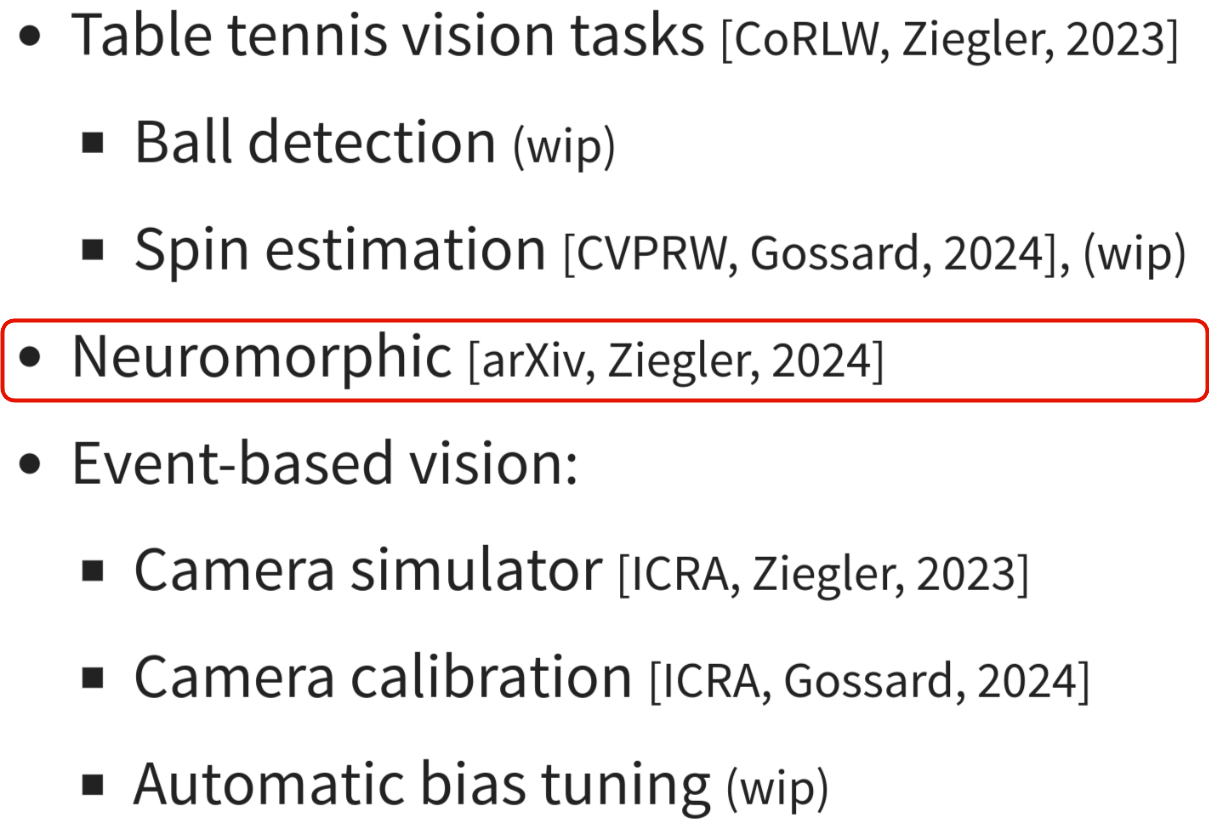

My research

- Table tennis vision tasks [CoRLW, Ziegler, 2023]

- Ball detection (wip)

- Spin estimation [CVPRW, Gossard, 2024], (wip)

- Neuromorphic [arXiv, Ziegler, 2024]

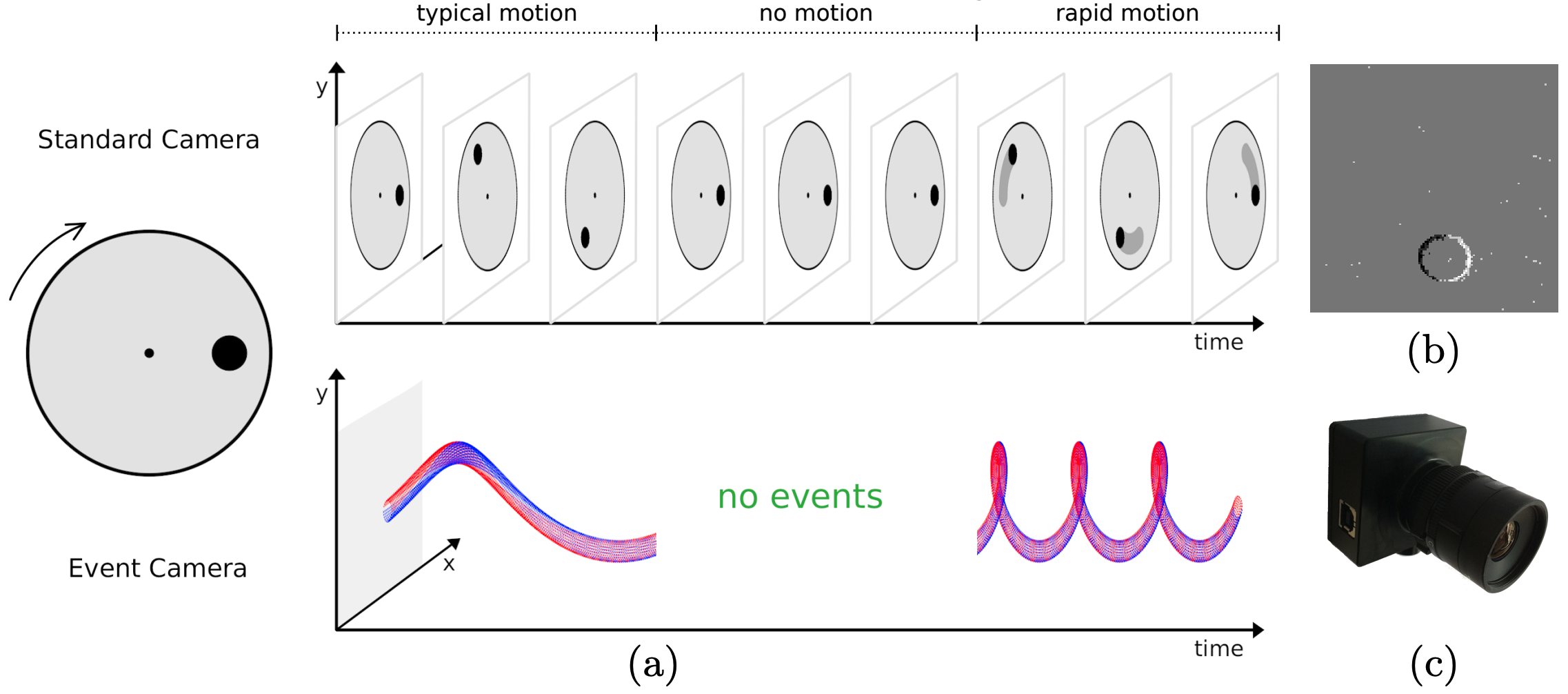

- Event-based vision:

- Camera simulator [ICRA, Ziegler, 2023]

- Camera calibration [ICRA, Gossard, 2024]

- Automatic bias tuning (wip)

Today’s talk

Fast-Moving Object Detection with Neuromorphic Hardware

Andreas Ziegler\(^1\), Karl Vetter\(^1\), Thomas Gossard\(^1\), Sebastian Otte\(^2\), and Andreas Zell\(^1\)

\(^1\) University of Tübingen, \(^2\) University of Lübeck

https://cogsys-tuebingen.github.io/snn-edge-benchmark/

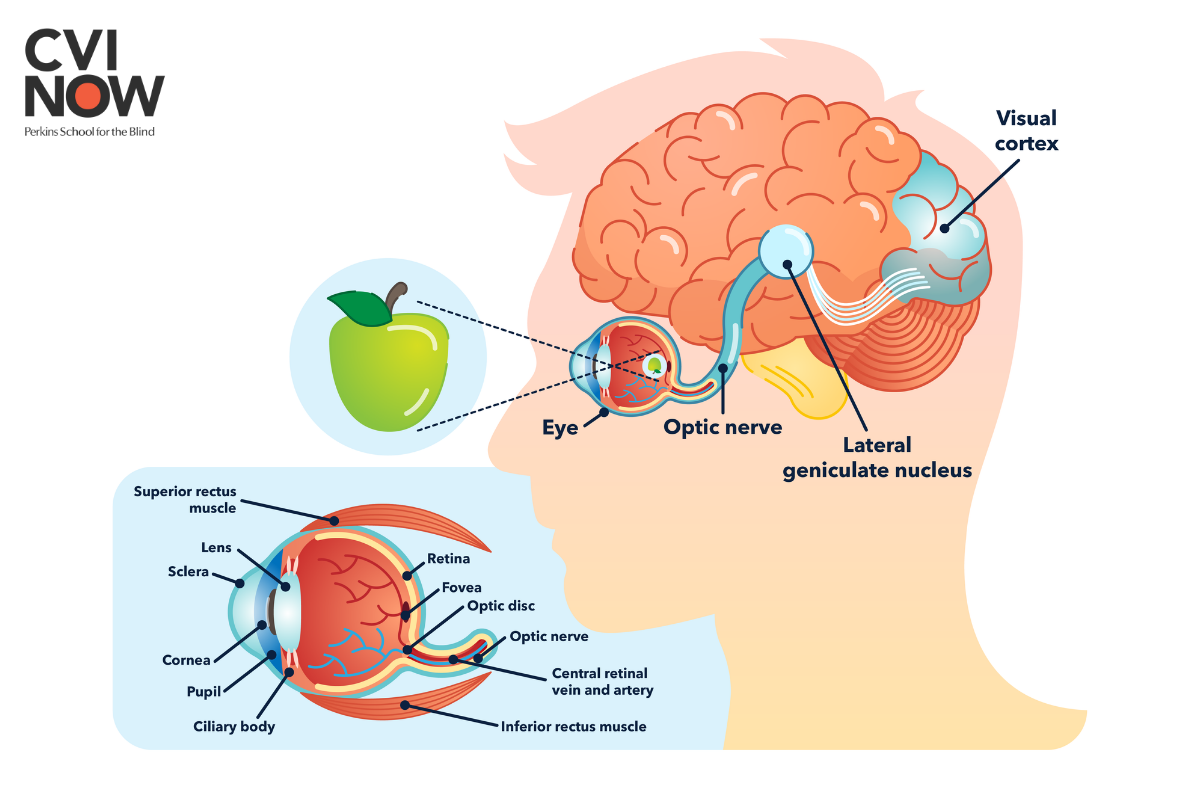

Do you see the difference?

Visual Cortex:

- Power consumption: 2-3 Watt

- Performance: \(10^{16}\) FLOPS

Neural Networks:

- Power consumption: 200-300 Watt

- Performance: \(10^{12}\) FLOPS

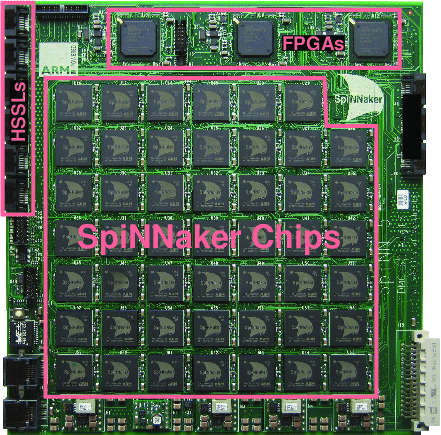
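The gap becomes clearer as energy efficiency (FLOPS per watt). The sketch below uses only the figures quoted on the slide. Taking the midpoint of each power range (2.5 W and 250 W) is our own assumption, so the sketch also prints the least and most favourable pairings.

```python
# FLOPS figures as quoted on the slide; the power values passed in below are
# our own choice of midpoints and range ends (an assumption, not from the slide).
CORTEX_FLOPS = 1e16
NN_FLOPS = 1e12


def flops_per_watt(flops: float, watts: float) -> float:
    """Energy efficiency expressed as FLOPS per watt."""
    return flops / watts


def efficiency_ratio(cortex_w: float, nn_w: float) -> float:
    """How many times more FLOPS/W the visual cortex delivers than the neural network."""
    return flops_per_watt(CORTEX_FLOPS, cortex_w) / flops_per_watt(NN_FLOPS, nn_w)


if __name__ == "__main__":
    # Midpoints of the quoted power ranges: 2.5 W vs 250 W.
    print(f"midpoint:   {efficiency_ratio(2.5, 250.0):.0f}x")  # 1000000x
    # Least favourable pairing for the cortex: 3 W vs 200 W (about 6.7e5x).
    print(f"worst case: {efficiency_ratio(3.0, 200.0):.0f}x")
    # Most favourable pairing for the cortex: 2 W vs 300 W (about 1.5e6x).
    print(f"best case:  {efficiency_ratio(2.0, 300.0):.0f}x")
```

With any pairing from the quoted ranges, the visual cortex comes out roughly six orders of magnitude more efficient per watt.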

Neuromorphic Computing

The next generation of Neural Networks

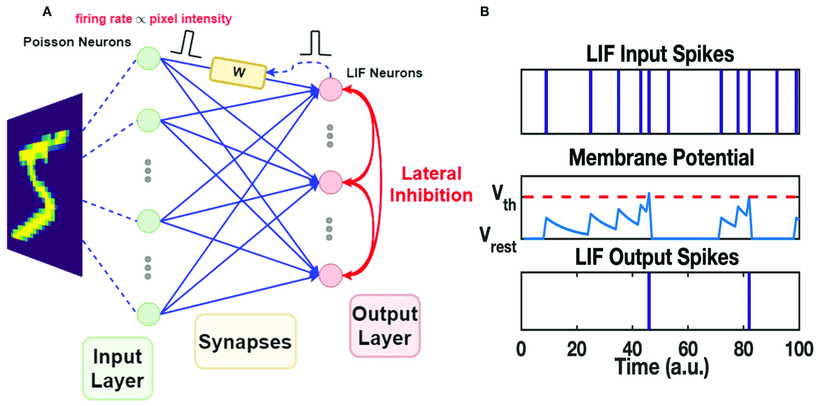

Spiking Neural Networks:
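SNN neurons communicate through binary spikes over time rather than dense activations. The sketch below shows a common textbook model, the discrete-time leaky integrate-and-fire (LIF) neuron. The decay factor `beta`, the threshold, and the reset-to-zero rule are illustrative assumptions. Each neuromorphic chip implements its own neuron variant, so this is not the model used on any particular chip.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LIFNeuron:
    """Discrete-time leaky integrate-and-fire neuron (illustrative parameters)."""
    beta: float = 0.9       # membrane decay factor per time step (assumed value)
    threshold: float = 1.0  # firing threshold (assumed value)
    v: float = 0.0          # membrane potential

    def step(self, input_current: float) -> int:
        # Leak the previous potential, then integrate the new input.
        self.v = self.beta * self.v + input_current
        if self.v >= self.threshold:
            self.v = 0.0  # reset to zero after emitting a spike
            return 1
        return 0


def run(neuron: LIFNeuron, inputs: List[float]) -> List[int]:
    """Feed one input value per time step and collect the output spike train."""
    return [neuron.step(x) for x in inputs]


if __name__ == "__main__":
    # A constant input of 0.3 builds up over several steps before each spike.
    print(run(LIFNeuron(), [0.3] * 8))  # [0, 0, 0, 1, 0, 0, 0, 1]
```

The output is sparse. Computation and communication only happen when a spike fires, and this sparsity is where neuromorphic hardware expects to save energy.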

Event-Based Cameras:
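An event camera does not output frames. Each pixel independently reports a change in brightness. The sketch below implements the idealised, widely used generation model: a pixel emits an event of polarity ±1 whenever its log intensity moves by at least a contrast threshold `C` from the level at its last event. The threshold value and the sampled-intensity input are assumptions. Real sensors add noise, refractory periods, and microsecond timestamps.

```python
import math
from typing import List, Tuple

Event = Tuple[float, int]  # (timestamp, polarity)


def pixel_events(times: List[float], intensities: List[float],
                 contrast_threshold: float = 0.2) -> List[Event]:
    """Idealised event generation for a single pixel.

    Assumes strictly positive intensities sampled at the given timestamps.
    """
    events: List[Event] = []
    ref = math.log(intensities[0])  # log intensity at the last emitted event
    for t, intensity in zip(times[1:], intensities[1:]):
        delta = math.log(intensity) - ref
        # One event per threshold crossing: large changes yield several events.
        while abs(delta) >= contrast_threshold:
            polarity = 1 if delta > 0 else -1
            events.append((t, polarity))
            ref += polarity * contrast_threshold
            delta = math.log(intensity) - ref
    return events


if __name__ == "__main__":
    # Brightening by 50% crosses the 0.2 log-threshold twice (log 1.5 ~ 0.405).
    print(pixel_events([0.0, 1.0], [1.0, 1.5]))  # [(1.0, 1), (1.0, 1)]
```

A static scene produces no events at all. For a fast ball against a static background, the data stream is therefore both sparse and low-latency.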

Neuromorphic Computing

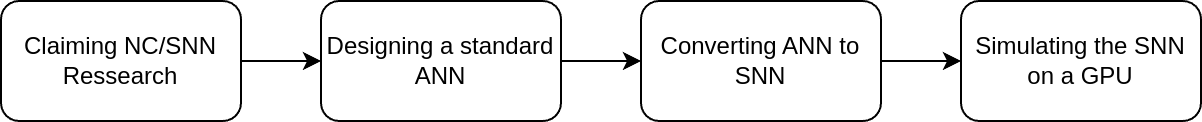

What \(>90\%\) of research is doing

Neuromorphic Computing

What \(>90\%\) of research is doing

But this is really inefficient

Neuromorphic Computing for Robotics

Nice, but what about the specs?

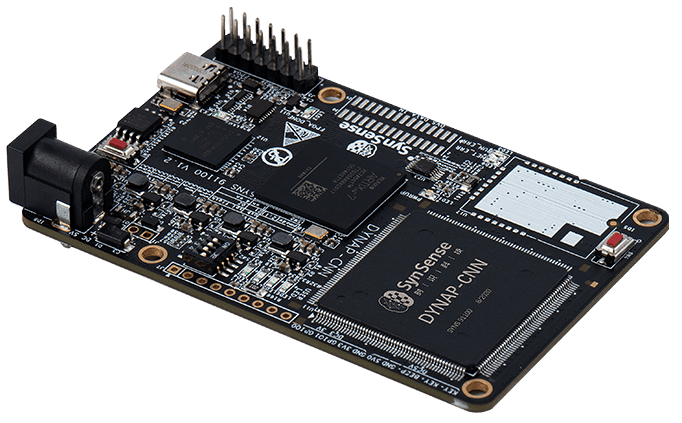

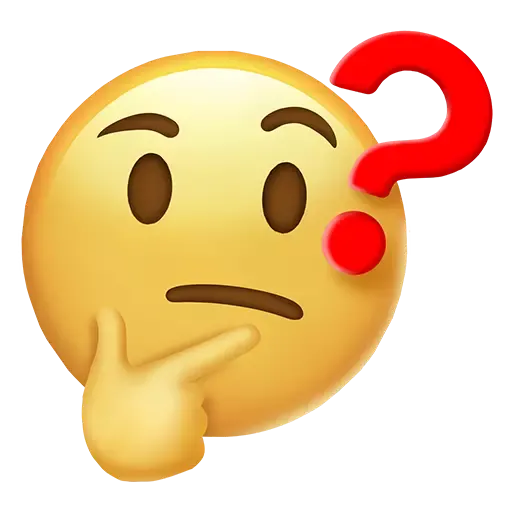

DynapCNN Specs

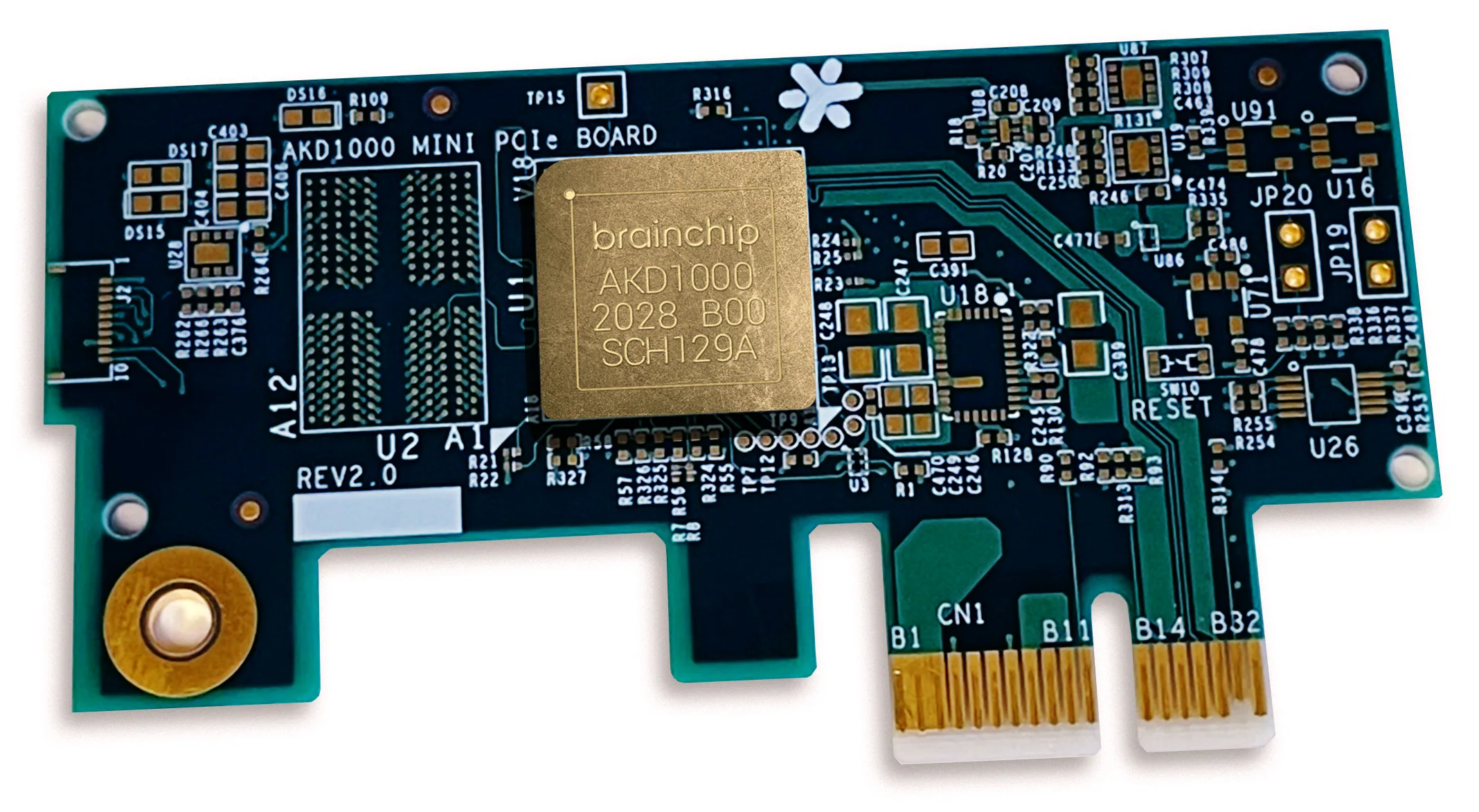

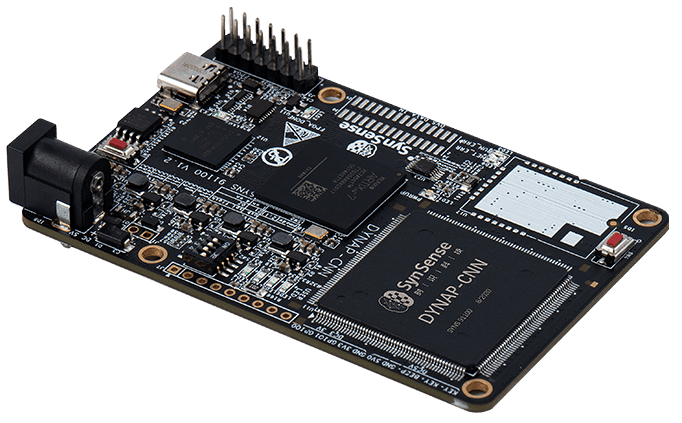

Akida Specs

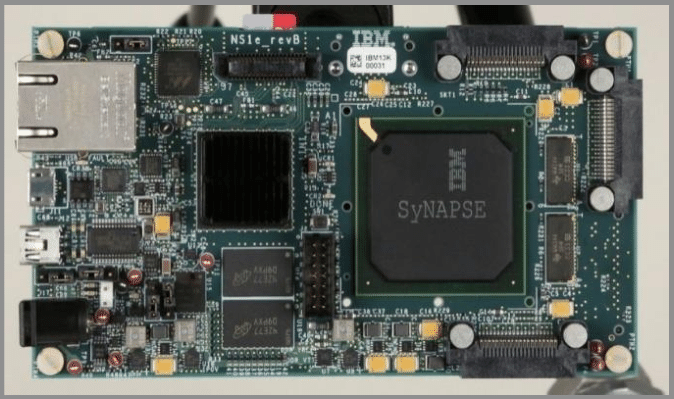

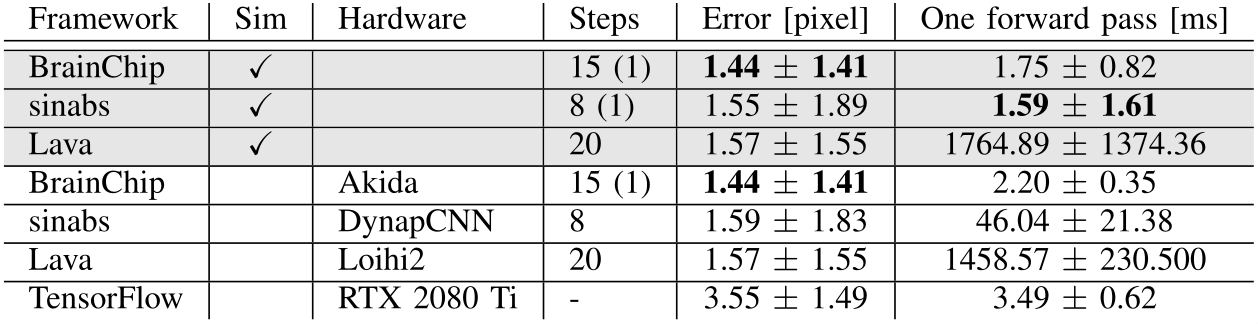

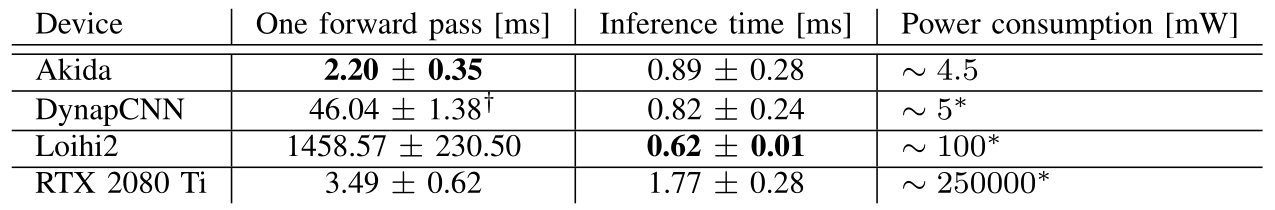

A Benchmark of Neuromorphic Computing (NC) Hardware

We compare…

- the inference time

- the time per forward pass

- the power consumption

of the following neuromorphic chips:

- SynSense DynapCNN

- BrainChip Akida

- Intel Loihi 2
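The three metrics are related: the energy per inference is the average power multiplied by the time taken. The sketch below is a minimal, hypothetical timing harness that shows this relation. `hypothetical_forward_pass` is a stand-in, not a call from sinabs, MetaTF, or Lava. The slides also do not say how "inference time" differs from "time per forward pass". One plausible reading is end-to-end time including host I/O versus on-chip compute only, and either callable could be wrapped the same way. The average power is assumed to come from a separate measurement.

```python
import statistics
import time
from typing import Callable, Dict, List


def time_calls(fn: Callable[[], object], repeats: int = 100) -> List[float]:
    """Return the wall-clock duration of each call to fn, in seconds."""
    durations: List[float] = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        durations.append(time.perf_counter() - start)
    return durations


def summarize(durations: List[float], avg_power_w: float) -> Dict[str, float]:
    """Median latency and energy per call (energy = average power x time)."""
    median_s = statistics.median(durations)
    return {
        "median_ms": median_s * 1e3,
        "energy_mj": avg_power_w * median_s * 1e3,  # W * s = J; x1e3 gives mJ
    }


def hypothetical_forward_pass() -> None:
    # Hypothetical stand-in for a call into a chip's SDK; replace with the real call.
    sum(i * i for i in range(10_000))


if __name__ == "__main__":
    # avg_power_w=1.0 is a placeholder, not a measured value for any chip.
    print(summarize(time_calls(hypothetical_forward_pass), avg_power_w=1.0))
```

The median is used instead of the mean so that occasional host-side hiccups (scheduling, USB transfers) do not dominate the latency figure.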

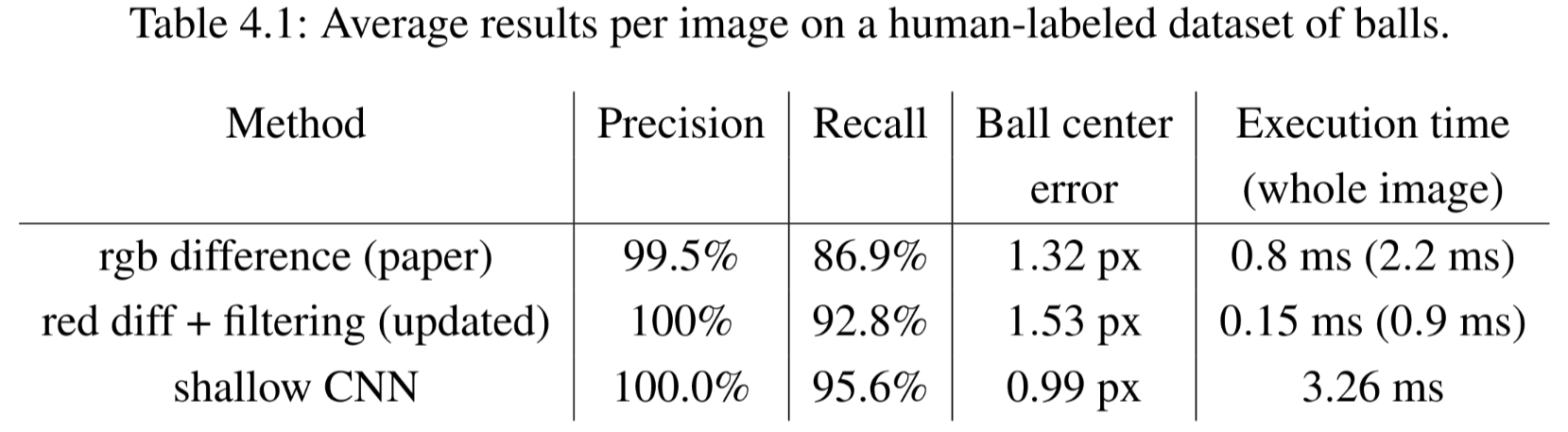

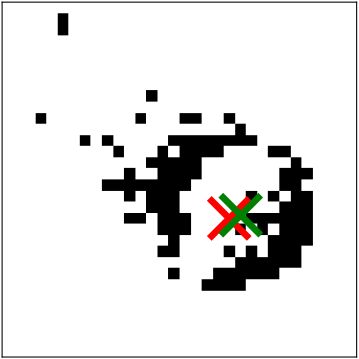

The benchmark task

Ball detection

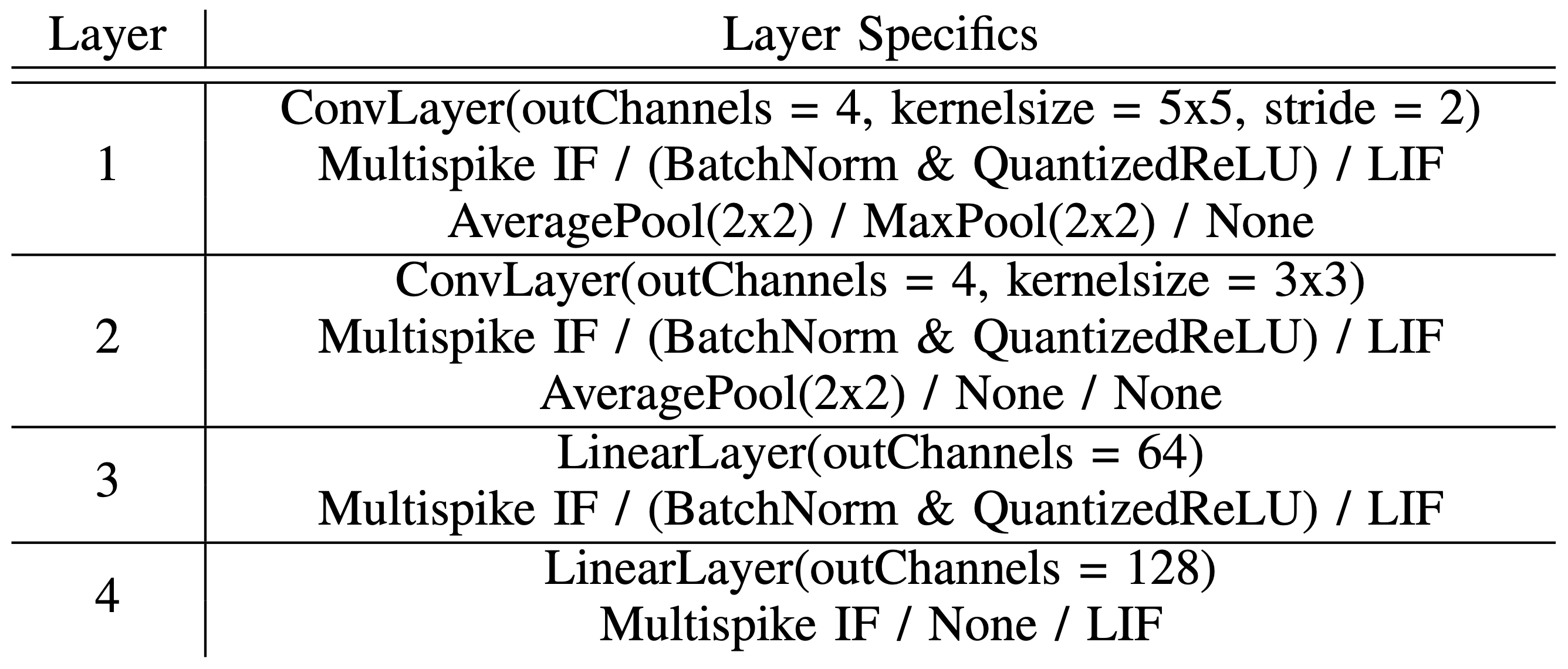

The networks

Three SNN frameworks, three architectures …

- DynapCNN: sinabs

- Akida: MetaTF

- Loihi 2: Lava

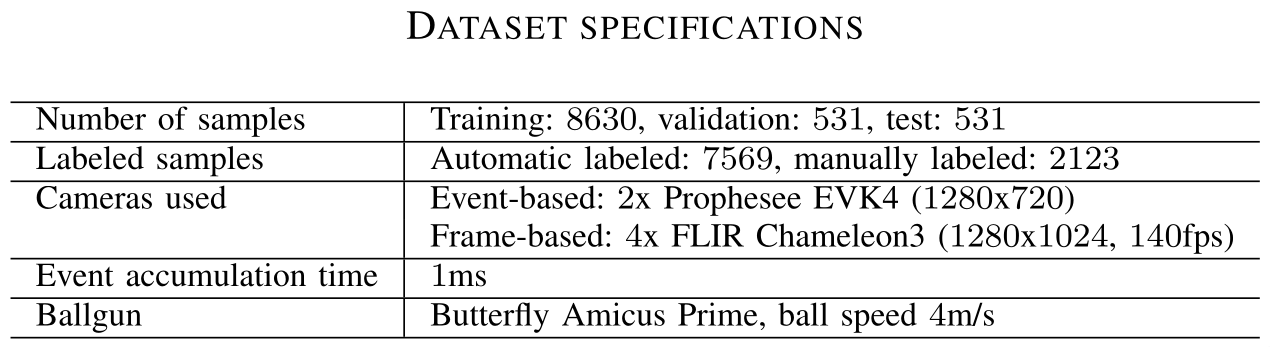

Machine Learning needs data

We project 3D points from the frame-based (FB) pipeline into the event-based (EB) camera frame
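The projection step can be illustrated with the standard pinhole camera model. A 3D point is first moved into the event camera's coordinate frame with the extrinsics `R`, `t`. It is then mapped to pixels with the intrinsic matrix `K`. In this minimal sketch, lens distortion is ignored and all matrix values are made up for illustration. The actual labeling pipeline presumably uses the calibrated parameters of the event camera.

```python
from typing import Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]
Vector3 = Sequence[float]


def project_point(p_world: Vector3, R: Matrix3, t: Vector3,
                  K: Matrix3) -> Tuple[float, float]:
    """Project a 3D point to pixel coordinates with a pinhole camera model.

    R, t: world-to-camera rotation and translation (extrinsics).
    K: 3x3 intrinsic matrix. Lens distortion is ignored.
    """
    # Transform into the camera frame: p_cam = R @ p_world + t
    p_cam = [sum(R[i][j] * p_world[j] for j in range(3)) + t[i] for i in range(3)]
    if p_cam[2] <= 0:
        raise ValueError("point is behind the camera")
    # Apply the intrinsics: [u*z, v*z, z] = K @ p_cam, then divide by depth.
    uvw = [sum(K[i][j] * p_cam[j] for j in range(3)) for i in range(3)]
    return uvw[0] / uvw[2], uvw[1] / uvw[2]


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    # Made-up intrinsics: focal length 500 px, principal point (320, 240).
    K = [[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]]
    print(project_point((0.1, -0.05, 2.0), identity, (0.0, 0.0, 0.0), K))
    # (345.0, 227.5)
```

Each projected pixel position, paired with the events around that timestamp, can then serve as a ball-position label. Frames where the projection is unreliable would still need the manual labeling mentioned next.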

Some more manual labeling

The benchmarking setup

The benchmarking results

Let’s get into more detail

Note: Hardware integration matters!

Now to the (real) robotics part

The whole setup in action

Conclusion

NC and SNNs are promising for robotics

However, the hardware is still in its infancy

Hardware integration is key to making it usable

Thanks

The organizer, for the workshop and the invitation

Sony AI for funding the project

The Cognitive Systems Group for the infrastructure

My co-workers, students and colleagues:

Thomas Gossard, Jonas Tebbe, Karl Vetter, David Joseph, Emil Moldovan and Sebastian Otte

Let’s stay in touch and collaborate

My topics:

- Real-time object detection

- Object detection in clutter

- Ball spin estimation

- Automatic bias optimization

- Event-based vision for tactile sensing

Email: andreas.ziegler@uni-tuebingen.de

Event-Based Vision for Fast Robot Control - Andreas Ziegler